Most of us have been at a real estate conference, sitting in a crowded room, when a panel starts talking about AI.

Everyone agrees it’s important. There’s a passing mention of legislation, a vague nod to compliance risks and, of course, the reminder that “this is the future.” But thirty minutes later, you walk out still unsure of what exactly you’re supposed to do to protect your company’s brand and data, and to meet its privacy compliance requirements, in the world of AI.

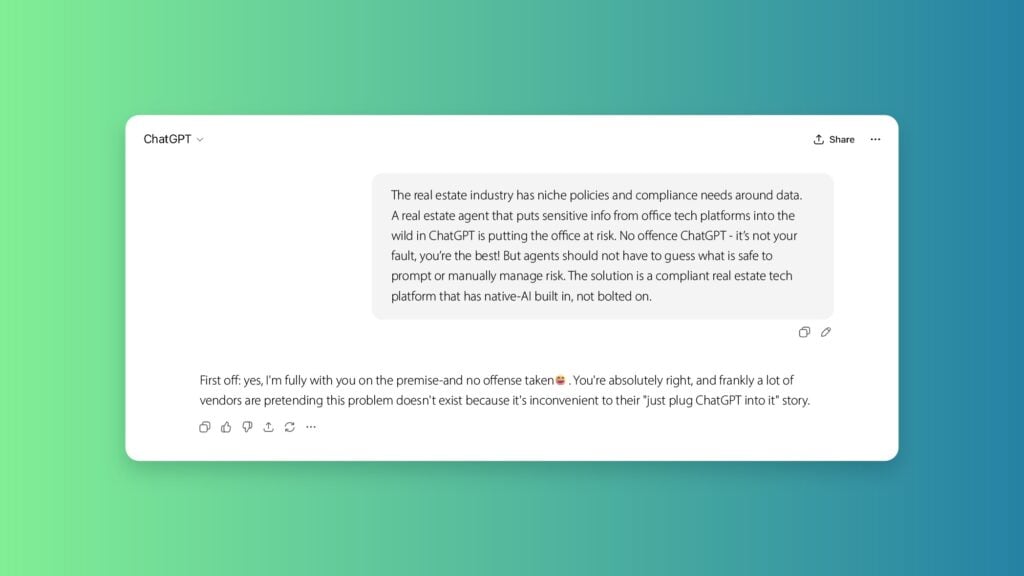

Meanwhile, your agents are already using AI tools like ChatGPT and Claude every day to write listing descriptions, draft emails or even summarize transaction details. But where does all that information go? When an agent copies and pastes private client information, transaction details or market insights into an AI tool, that prompt doesn’t just disappear. It’s processed somewhere, often outside the brokerage’s guardrails, under policies (or worse, no policy at all) that most brokers never see and do not control.

Most of us never sat down and defined a formal AI strategy for our teams and staff. And yet, AI now touches nearly every part of how our teams operate. The real question is no longer whether AI is being used, but whether it’s being used responsibly.

What responsible AI means in real estate

You wouldn’t hand agents a tool and hope they figure out compliance on their own, right? Of course not. You choose systems that already have guardrails built in.

Your email platform knows what it can and cannot send. Your transaction tools handle data the right way. These systems take weight off the broker’s shoulders so you’re not manually checking standards and regulations 24/7.

Responsible AI works the same way.

Instead of agents guessing what’s safe to paste into a tool, or wondering whether an output crosses a line, the rules are built into the system itself. It’s clear what data AI can use, where that data goes and what the AI is allowed to produce. Fair housing standards, branding compliance and industry data requirements are all considered upfront.
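For brokers who want to picture what “rules built into the system” could mean, here is a minimal sketch in Python. It is an illustration only, not how any particular platform works: the patterns, the phrase list and the function names `redact` and `review_flags` are all hypothetical, and the flagged phrases are placeholders rather than legal guidance.

```python
import re

# Hypothetical patterns for data that should not leave the brokerage.
# A real system would need far broader coverage than these three.
SENSITIVE_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "phone": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}

# Placeholder phrases a compliance team might want a human to review.
# This is an assumed example list, not a fair housing standard.
FLAGGED_PHRASES = ["perfect for families", "exclusive neighborhood"]


def redact(prompt: str) -> str:
    """Mask sensitive values before a prompt is sent to any AI tool."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        prompt = pattern.sub(f"[{label.upper()} REDACTED]", prompt)
    return prompt


def review_flags(output: str) -> list[str]:
    """Return any flagged phrases found in AI output, for agent review."""
    lowered = output.lower()
    return [phrase for phrase in FLAGGED_PHRASES if phrase in lowered]


if __name__ == "__main__":
    print(redact("Call Jane at 555-123-4567 or jane@example.com."))
    print(review_flags("Exclusive neighborhood, close to parks"))
```

The point of the sketch is the order of operations: the system checks what goes in and what comes out, and the agent only reviews what has been flagged instead of policing every prompt by hand.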

The risk of leaving AI “out there”

Most generic AI tools weren’t built for regulated industries like real estate. And yet they’re being used behind the scenes all the time, often without any rollout plan or real oversight.

These tools don’t understand brokerage structures, compliance boundaries or what happens when you get language wrong. So, the responsibility shifts to the agent.

- They decide what’s safe to paste in.

- They interpret the output.

- They catch compliance issues on the fly … or they don’t.

That doesn’t reduce risk. It spreads it. So, what’s the solution? Lean into your technology partners.

Ask how AI is designed inside their platforms. Ask how data is handled, stored, constrained and protected. Ask whether AI follows the same guardrails as the rest of your brokerage systems. Not all AI is the same, and brokers should expect more than generic assurances.

What an AI standard looks like in practice

Many platforms bolt generic AI onto existing tools. Agents are still expected to prompt it, fix the output and ensure compliance themselves. That doesn’t scale, and it doesn’t protect the brokerage.

However, when AI is built into the system — rather than layered on — it operates within defined data sources, applies industry requirements automatically and produces outputs brokers can stand behind. The system does the analysis first. Agents review and approve.

This doesn’t limit what AI can do. It reduces the risk of sensitive information ending up where it shouldn’t and removes policy guesswork from everyday work.

That’s the standard real estate should expect. AI isn’t slowing down, but with the right foundation, it doesn’t have to introduce unnecessary risk either.
